Python AI / LLM Tracing

Learn how to set up highlight.io tracing for common Python AI / LLM libraries to automatically instrument model training, inference, and evaluation.

Supported Python libraries

highlight.io supports tracing AI / LLM operations using OpenLLMetry. Supported libraries include:

Anthropic, Bedrock (AWS), ChromaDB, Cohere, Haystack, LangChain, LlamaIndex, OpenAI (Azure), Pinecone, Qdrant, Replicate, Transformers (Hugging Face), VertexAI (GCP), WatsonX (IBM Watsonx AI), Weaviate

# install and use your library in your code
pip install openai
pip install llama-index

Install the highlight-io Python package.

Download the package from PyPI and add it to your requirements. If you publish your function as a zip or S3 file upload, make sure highlight-io is included in the build.
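One way to confirm that the package made it into the build, once the install command for your package manager has run, is a small check in the build or startup step. The following is a minimal sketch using only the standard library; the helper names `installed_version` and `missing_distributions` are hypothetical and not part of highlight-io.

```python
from __future__ import annotations

from importlib import metadata


def installed_version(distribution: str) -> str | None:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


def missing_distributions(required: list[str]) -> list[str]:
    """Return the names in `required` that are not installed in this environment."""
    return [name for name in required if installed_version(name) is None]


# "highlight-io" is the package name used in the install step of this guide.
# An empty list means everything required is present in this environment.
print(missing_distributions(["highlight-io"]))
```

Running this inside the same environment that gets zipped or uploaded is the point of the check; running it on a development machine only tells you about that machine.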

poetry add highlight-io
# or with pip
pip install highlight-io

Initialize the Highlight SDK for your respective framework.

Set up the SDK. Supported libraries will be instrumented automatically.

import highlight_io

# `instrument_logging=True` sets up logging instrumentation.
# if you do not want to send logs or are using `loguru`, pass `instrument_logging=False`
H = highlight_io.H(
    "<YOUR_PROJECT_ID>",
    instrument_logging=True,
    service_name="my-app",
    service_version="git-sha",
    environment="production",
)
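The values passed to the SDK above are hard-coded. If you would rather keep the project ID and deployment details out of source control, a sketch along these lines reads them from environment variables. The helper `highlight_settings` and every variable name in it (`HIGHLIGHT_PROJECT_ID`, `HIGHLIGHT_INSTRUMENT_LOGGING`, `SERVICE_NAME`, `GIT_SHA`, `APP_ENV`) are assumptions made for illustration, not names that highlight-io defines.

```python
from __future__ import annotations

import os
from typing import Any, Mapping


def highlight_settings(env: Mapping[str, str] | None = None) -> dict[str, Any]:
    """Collect the SDK arguments shown above from environment variables.

    All variable names here are hypothetical; rename them to match your deployment.
    """
    env = os.environ if env is None else env
    project_id = env.get("HIGHLIGHT_PROJECT_ID")
    if not project_id:
        raise ValueError("HIGHLIGHT_PROJECT_ID is not set")
    return {
        "project_id": project_id,
        # Any value other than "false" (case-insensitive) keeps logging instrumentation on.
        "instrument_logging": env.get("HIGHLIGHT_INSTRUMENT_LOGGING", "true").lower() != "false",
        "service_name": env.get("SERVICE_NAME", "my-app"),
        "service_version": env.get("GIT_SHA", "unknown"),
        "environment": env.get("APP_ENV", "development"),
    }


# Usage, assuming highlight_io is installed:
#   import highlight_io
#   settings = highlight_settings()
#   H = highlight_io.H(
#       settings.pop("project_id"),  # passed positionally, as in the snippet above
#       **settings,
#   )
```

Failing fast when the project ID is missing is a design choice for this sketch; it makes a misconfigured deployment visible at startup rather than as silently missing traces.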

Instrument your code.

Set up an endpoint or a function with an HTTP trigger that uses the library you are trying to test. For example, if you are testing the requests library, you can set up a function that makes a request to a public API.

from openai import OpenAI

import highlight_io
from highlight_io.integrations.flask import FlaskIntegration

# `instrument_logging=True` sets up logging instrumentation.
# if you do not want to send logs or are using `loguru`, pass `instrument_logging=False`
H = highlight_io.H(
    "<YOUR_PROJECT_ID>",
    instrument_logging=True,
    service_name="my-app",
    service_version="git-sha",
    environment="production",
)

client = OpenAI()

chat_history = [
    {"role": "system", "content": "You are a helpful assistant."},
]


@highlight_io.trace
def complete(message: str) -> str:
    chat_history.append({"role": "user", "content": message})
    completion = client.chat.completions.create(
        model="gpt-4-turbo",
        messages=chat_history,
    )
    chat_history.append(
        {"role": "assistant", "content": completion.choices[0].message.content}
    )
    return completion.choices[0].message.content


def main():
    print(complete("What is the capital of the United States?"))


if __name__ == "__main__":
    main()
    H.flush()
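In the example above, H.flush() runs only after main() returns. If main() raises (for instance, because the model call fails), the flush is skipped and pending traces may not be sent before the process exits. A minimal sketch of one way to guard against that is a try/finally wrapper; `run_then_flush` is a hypothetical helper written for this guide, not part of the Highlight SDK.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def run_then_flush(work: Callable[[], T], flush: Callable[[], None]) -> T:
    """Run `work`, then call `flush`, even if `work` raises an exception."""
    try:
        return work()
    finally:
        # Runs on both the success path and the error path.
        flush()


# Usage with the example above:
#   if __name__ == "__main__":
#       run_then_flush(main, H.flush)
```

The exception from `work` still propagates after the flush, so failures remain visible to the caller.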

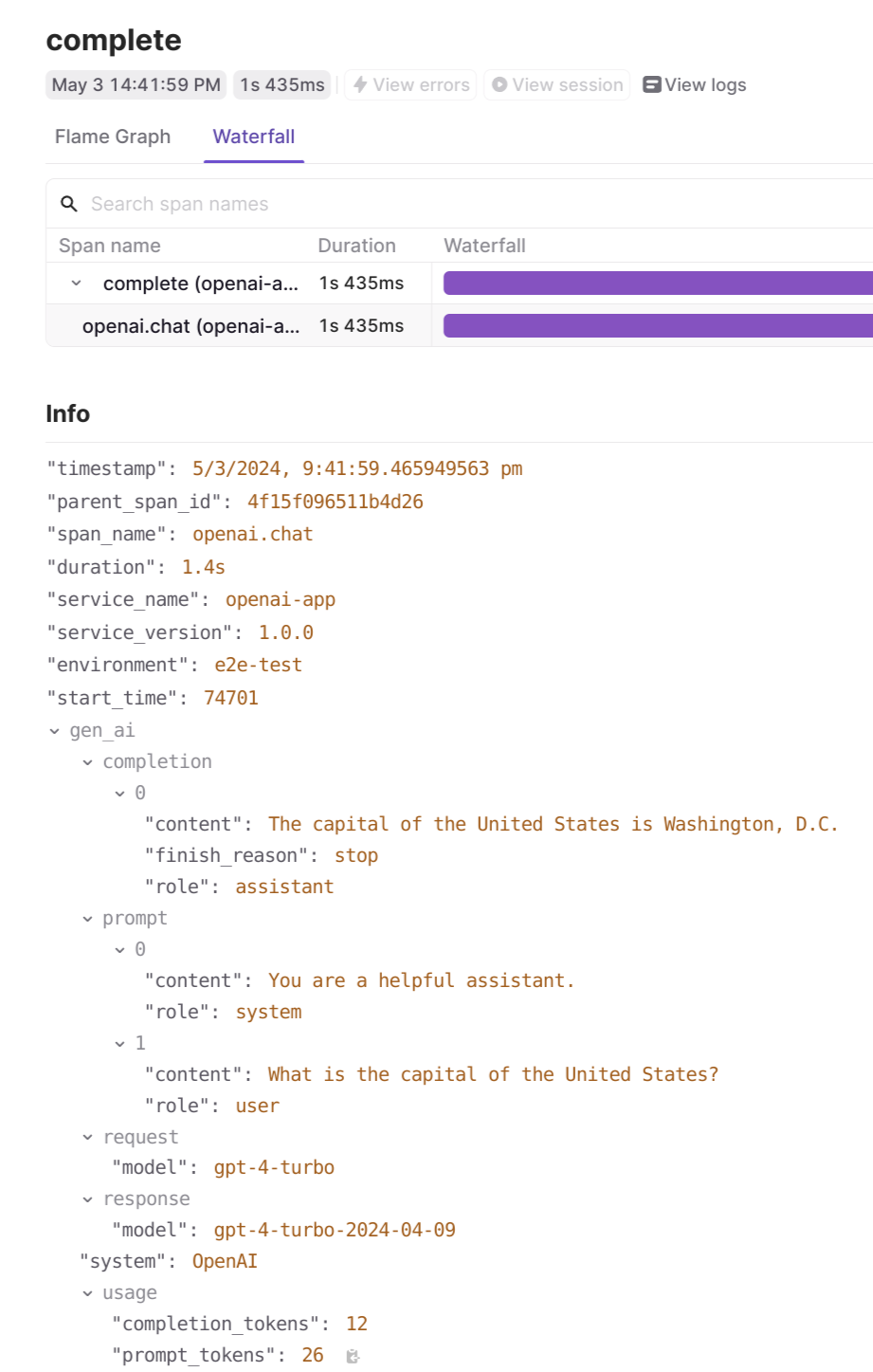

Verify your backend traces are being recorded.

Visit the highlight traces portal and check that backend traces are coming in.